Spark: Difference between revisions

Line 50 (old) | Line 50 (new)

module load spark | module load spark

Set Spark parameters for Spark cluster | ## Set Spark parameters for Spark cluster

export SPARK_LOCAL_DIRS=$HOME/spark/tmp | export SPARK_LOCAL_DIRS=$HOME/spark/tmp

export SPARK_WORKER_DIR=$SPARK_LOCAL_DIRS | export SPARK_WORKER_DIR=$SPARK_LOCAL_DIRS

Line 61 (old) | Line 59 (new)

export SPARK_NO_DAEMONIZE=true | export SPARK_NO_DAEMONIZE=true

export SPARK_LOG_DIR=$SPARK_LOCAL_DIRS | export SPARK_LOG_DIR=$SPARK_LOCAL_DIRS

mkdir -p $SPARK_LOCAL_DIRS | mkdir -p $SPARK_LOCAL_DIRS

Set Spark Master and Workers | ##Set Spark Master and Workers

MASTER_HOST=$(scontrol show hostname $SLURM_NODELIST | head -n 1) | MASTER_HOST=$(scontrol show hostname $SLURM_NODELIST | head -n 1)

export SPARK_MASTER_NODE=$(host $MASTER_HOST | head -1 | cut -d ' ' -f 4) | export SPARK_MASTER_NODE=$(host $MASTER_HOST | head -1 | cut -d ' ' -f 4)

export MAX_SLAVES=$(expr $SLURM_JOB_NUM_NODES - 1) | export MAX_SLAVES=$(expr $SLURM_JOB_NUM_NODES - 1)

# for starting spark master | (removed)

(added) | ## for starting spark master

$SPARK_HOME/sbin/start-master.sh & | $SPARK_HOME/sbin/start-master.sh &

# use spark defaults for worker resources (all mem -1 GB, all cores) since using exclusive | (removed)

#for starting spark worker | ## use spark defaults for worker resources (all mem -1 GB, all cores) since using exclusive

(added) | ## for starting spark worker

$SPARK_HOME/sbin/start-slave.sh spark://$SPARK_MASTER_NODE:$SPARK_MASTER_PORT | $SPARK_HOME/sbin/start-slave.sh spark://$SPARK_MASTER_NODE:$SPARK_MASTER_PORT

</source> | </source>

Revision as of 19:43, 24 May 2018

Description

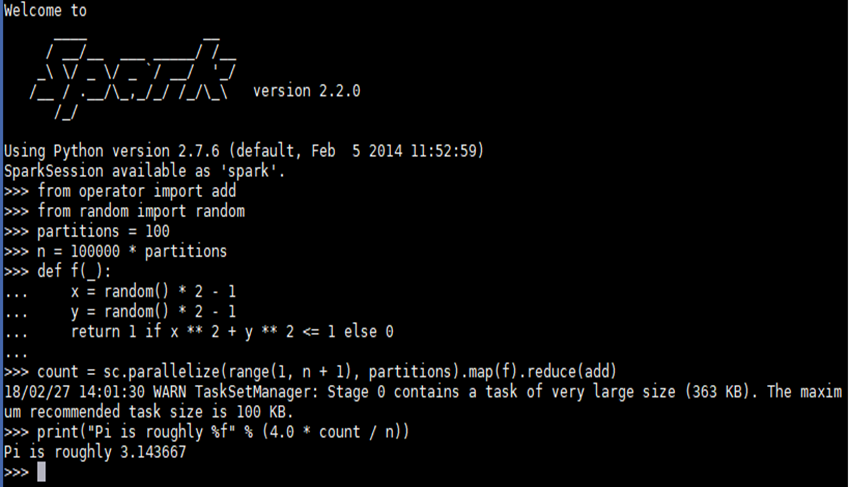

Apache Spark is a fast and general-purpose cluster computing system. It provides high-level APIs in Java, Scala, Python and R, and an optimized engine that supports general execution graphs. It also supports a rich set of higher-level tools including Spark SQL for SQL and structured data processing, MLlib for machine learning, GraphX for graph processing, and Spark Streaming.

Environment Modules

Run module spider spark to find out what environment modules are available for this application.
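As a minimal sketch of the module workflow, the snippet below wraps the lookup and load steps in a function. The function name load_spark_module is hypothetical, and the guard is an assumption added so the snippet fails cleanly on machines where the module command is not defined.

```shell
# Sketch: discover and load the Spark module. The guard lets the snippet
# degrade gracefully where the `module` command is not available.
load_spark_module() {
    if command -v module >/dev/null 2>&1; then
        module spider spark    # list the available Spark modules and versions
        module load spark      # load the default Spark module
        echo "SPARK_HOME is ${SPARK_HOME:-unset}"
    else
        echo "module command not found; run this on a HiperGator node" >&2
        return 1
    fi
}

load_spark_module || true
```

To load a specific version, pass the version string reported by module spider to module load instead of the bare name.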

System Variables

- HPC_SPARK_DIR - installation directory

- HPC_SPARK_BIN - executable directory

- HPC_SPARK_SLURM - SLURM job script examples

- SPARK_HOME - examples directory
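To check which of these variables are set in the current shell, a small helper like the following may be useful. It assumes the variable names expand to HPC_SPARK_DIR, HPC_SPARK_BIN and HPC_SPARK_SLURM; the function name show_spark_env is hypothetical.

```shell
# Sketch: print each Spark-related variable, or "unset" if the Spark
# module has not been loaded in this shell.
show_spark_env() {
    for name in HPC_SPARK_DIR HPC_SPARK_BIN HPC_SPARK_SLURM SPARK_HOME; do
        # Indirect lookup of the variable whose name is stored in $name.
        eval "value=\${$name:-unset}"
        printf '%s=%s\n' "$name" "$value"
    done
}

show_spark_env
```

Running it before and after loading the module shows which variables the module defines.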

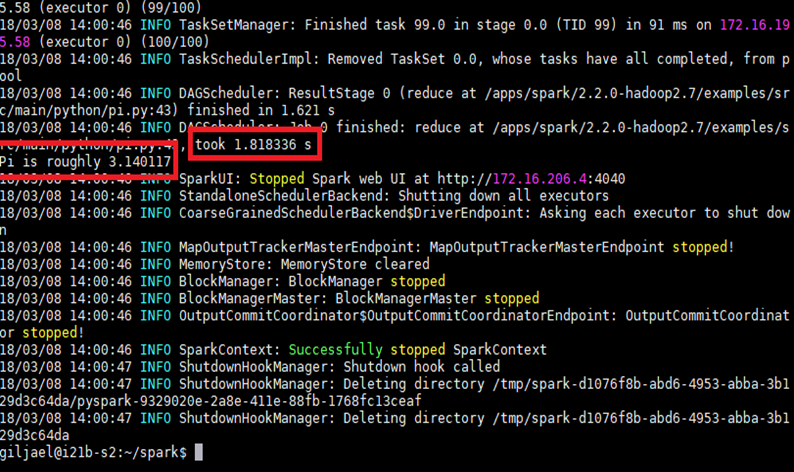

Running Spark on HiperGator

To run Spark jobs on HiperGator, first create a Spark cluster on HiperGator via SLURM. This section gives a simple example of how to create a Spark cluster on HiperGator and how to submit Spark jobs to it. For details about running Spark jobs on HiperGator, please refer to the Spark Workshop. For the Spark parameters used in this section, please refer to Spark's homepage.

Spark cluster on HiperGator

Expand this section to view instructions for creating a Spark cluster on HiperGator.
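The two values a standalone cluster setup needs from SLURM are the master URL and the number of worker nodes. The sketch below derives both; the helper names spark_master_url and spark_worker_count are hypothetical, and the fallback values are assumptions so that the snippet also runs outside a SLURM job. Port 7077 is Spark's default standalone master port.

```shell
# Build the spark:// URL that workers and clients use to reach the master.
spark_master_url() {
    # $1 = master host, $2 = master port (defaults to 7077)
    echo "spark://$1:${2:-7077}"
}

# One node is set aside for the master, mirroring
# MAX_SLAVES = SLURM_JOB_NUM_NODES - 1 in the job script.
spark_worker_count() {
    # $1 = total number of nodes in the SLURM allocation
    echo $(( $1 - 1 ))
}

# Inside a SLURM job the scheduler sets SLURM_JOB_NUM_NODES; the
# fallbacks here are assumptions for running the sketch elsewhere.
nodes=${SLURM_JOB_NUM_NODES:-1}
master_host=${MASTER_HOST:-$(hostname)}
echo "master URL: $(spark_master_url "$master_host" "${SPARK_MASTER_PORT:-7077}")"
echo "workers:    $(spark_worker_count "$nodes")"
```

In a real job script the master host would come from the first entry of scontrol show hostname $SLURM_NODELIST rather than from hostname.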

Spark interactive job

Expand this section to view instructions for starting preset applications without a job script.

Spark batch job

Expand this section to view instructions for running Spark applications with a job script.
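Once a standalone master is running, an application can be handed to it with spark-submit and its --master option. The wrapper below is only a sketch: the function name submit_spark_app, the DRY_RUN switch, the host node1 and the file my_app.py are all hypothetical and stand in for values from your own job.

```shell
# Sketch: submit an application to an already running standalone master.
# With DRY_RUN=1 the command is printed instead of executed.
submit_spark_app() {
    master_url=$1
    shift
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "spark-submit --master $master_url $*"
    else
        spark-submit --master "$master_url" "$@"
    fi
}

# Hypothetical master host and application file, for illustration only.
DRY_RUN=1
submit_spark_app spark://node1:7077 my_app.py
```

Unset DRY_RUN (or set it to 0) inside a real batch job so that the command is actually executed against the cluster.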

Job Script Examples

See the Spark_Job_Scripts page for Spark job script examples.